As a starting point, here's a figure showing recent movements in exchange rates for the United States, Japan, the euro area, Brazil, China, and India. In each panel of the figure, the horizontal axis runs from 0 to 36 months. The shaded areas show how much exchange rates typically move over a 36-month period, based on data from January 1980 through June 2015. The darker shading, between the 25th and 75th percentiles, marks the range that contained the middle half of historical exchange rate movements. The lighter shading, between the 10th and 90th percentiles, marks the range that contained the middle 80 percent. The blue lines show the actual movement of exchange rates from a different recent starting date for each country (as shown in the panels). In every case the exchange rate has moved outside the 25th/75th band, and in most cases it is outside the 10th/90th band, too.
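Bands like these can be built with a short calculation: take every historical starting month, track the percent change in the exchange rate over the following 36 months, and then, month by month, take percentiles across all of those paths. The Python below is a minimal sketch of that idea, not the IMF's actual method. It assumes details the figure does not spell out: monthly index levels as input, simple percent changes rather than log changes, overlapping 36-month windows, and linear interpolation between ranks for percentiles. The names `movement_bands` and `percentile` are hypothetical.

```python
def percentile(values, p):
    """p-th percentile (0-100), interpolating linearly between sorted ranks."""
    xs = sorted(values)
    k = (len(xs) - 1) * p / 100.0
    lo = int(k)                      # floor, since k >= 0
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (k - lo)


def movement_bands(rates, horizon=36, percentiles=(10, 25, 75, 90)):
    """Percentile bands of cumulative exchange rate moves, by months elapsed.

    rates: monthly exchange rate index levels (hypothetical input format).
    Returns a list with one tuple per month 0..horizon; each tuple holds the
    requested percentiles of the percent change measured from every starting
    month that has a full `horizon` months of data after it.
    """
    n_starts = len(rates) - horizon
    if n_starts < 1:
        raise ValueError("need more than `horizon` observations")
    # paths[s][k] is the percent change k months after starting month s.
    paths = [
        [100.0 * (rates[s + k] / rates[s] - 1.0) for k in range(horizon + 1)]
        for s in range(n_starts)
    ]
    bands = []
    for k in range(horizon + 1):
        moves = [path[k] for path in paths]
        bands.append(tuple(percentile(moves, p) for p in percentiles))
    return bands


# Usage with a hypothetical toy series (not real data): 60 months of steady 1% growth.
toy = [100.0 * 1.01 ** t for t in range(60)]
bands = movement_bands(toy)
print(len(bands))  # 37: one row per month, 0 through 36
```

Plotting a country's actual path from a chosen start date against the 25th/75th and 10th/90th columns of `bands` gives the kind of comparison the figure makes.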

As the figure shows, currencies are getting stronger in the US, China, and India, but getting weaker in Japan, the euro area, and Brazil. The IMF describes the patterns this way:

Recent exchange rate movements have been unusually large. The U.S. dollar has appreciated by more than 10 percent in real effective terms since mid-2014. The euro has depreciated by more than 10 percent since early 2014 and the yen by more than 30 percent since mid-2012 ... Such movements, although not unprecedented, are well outside these currencies’ normal fluctuation ranges. Even for emerging market and developing economies, whose currencies typically fluctuate more than those of advanced economies, the recent movements have been unusually large.

The report focuses on how movements in exchange rates have historically affected the prices of imports and exports (which depends on the extent to which importers and exporters "pass through" exchange rate changes into the prices at which they buy and sell), and in turn on what those changes in import and export prices mean for the trade balance.

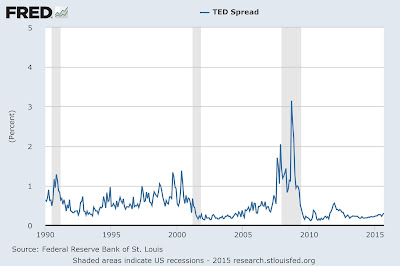

The results imply that, on average, a 10 percent real effective currency depreciation increases import prices by 6.1 percent and reduces export prices in foreign currency by 5.5 percent ... The estimation results are broadly in line with existing studies for major economies. ... The results suggest that a 10 percent real effective depreciation in an economy’s currency is associated with a rise in real net exports of, on average, 1.5 percent of GDP, with substantial cross-country variation around this average ...

The estimates of how movements in exchange rates affect trade seem sensible and mainstream to me, but I confess that I am more intrigued, and more concerned, by how changes in exchange rates can affect the global financial picture. In the past, countries often ran into extreme financial difficulties when they had borrowed extensively in a currency not their own--often US dollars--and then, when the exchange rate moved sharply, were unable to repay. In the last few years, the governments of most emerging market economies have tried to make sure this would not happen again, by keeping their borrowing relatively low and by building up reserves of US dollars that can be drawn down if needed.
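The trouble with borrowing in a currency not your own comes down to simple arithmetic: debt owed in dollars must be repaid out of income earned in local currency, so when the local currency loses value, the same dollar debt costs more to repay. The Python below is a stylized sketch with made-up numbers; `Firm` and `debt_to_revenue` are hypothetical names, and it assumes, purely for simplicity, that the borrower's local-currency revenue does not respond to the exchange rate.

```python
from dataclasses import dataclass


@dataclass
class Firm:
    """Hypothetical borrower with US dollar debt and local-currency revenue."""
    usd_debt: float        # outstanding debt, in US dollars
    local_revenue: float   # annual revenue, in local currency (held fixed here)


def debt_to_revenue(firm: Firm, local_per_usd: float) -> float:
    """Debt converted into local currency, as a multiple of annual revenue.

    local_per_usd is the exchange rate quoted as units of local currency
    per US dollar, so a depreciation of the local currency raises it.
    """
    return firm.usd_debt * local_per_usd / firm.local_revenue


if __name__ == "__main__":
    firm = Firm(usd_debt=100.0, local_revenue=400.0)
    before = debt_to_revenue(firm, local_per_usd=2.0)
    # Local currency loses a third of its dollar value: 2 -> 3 per dollar.
    after = debt_to_revenue(firm, local_per_usd=3.0)
    print(f"debt/revenue before: {before:.2f}, after: {after:.2f}")
    # prints "debt/revenue before: 0.50, after: 0.75"
```

Nothing about the borrower's business changed in this example; the debt burden rose by half purely because of the exchange rate. A real borrower's revenue might also move with the exchange rate (an exporter's, for instance), which this sketch deliberately leaves out.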

However, there is some reason for concern that many companies in emerging markets have been taking on a great deal more debt, and because a substantial share of that debt is denominated in foreign currency, these firms are increasingly exposed to the risk of shifting exchange rates. A different IMF report, the October 2015 Global Financial Stability Report, looks at this issue in Chapter 3: "Corporate Leverage in Emerging Markets--A Concern?" For a sample of the argument, the report notes:

Corporate debt in emerging market economies has risen significantly during the past decade. The corporate debt of nonfinancial firms across major emerging market economies increased from about $4 trillion in 2004 to well over $18 trillion in 2014 ... The average emerging market corporate debt-to-GDP ratio has also grown by 26 percentage points in the same period, but with notable heterogeneity across countries. ... Leverage has risen relatively more in vulnerable sectors and has tended to be accompanied by worsening firm-level characteristics. For example, higher leverage has been associated with, on average, rising foreign exchange exposures. Moreover, leverage has grown most in the cyclical construction sector, but also in the oil and gas subsector. Funds have largely been used to invest, but there are indications that the quality of investment has declined recently. These findings point to increased vulnerability to changes in global financial conditions and associated capital flow reversals—a point reinforced by the fact that during the 2013 “taper tantrum,” more leveraged firms saw their corporate spreads rise more sharply ...

The relatively benign consequence of exchange rate shifts is that they nudge the prices of exports and imports up and down. The deeper concern arises if movements in exchange rates lead to substantial debt defaults, or to "sudden stops," in which large flows of international financial capital that had been heading into a country abruptly reverse direction. In the last few decades, this mixture of debt problems and sudden shifts in international capital flows has been the starting point for national-level financial crises in East Asia, Russia, Latin America, and elsewhere.