Automobile travel transformed how people relate to distance: it decentralized how people live and work, and gave them a new array of choices for everything from the Friday night date to the long-distance road trip. I occasionally marvel that we can take our family of five, with all our gear, door-to-door for a getaway to a YMCA family camp 250 miles away in northern Minnesota--all for the marginal cost of less than a tank of gas. Driverless cars may turn out to be one of those rare inventions that transform transportation even further. Last August, KPMG and the nonprofit Center for Automotive Research published a report titled "Self-driving cars: The next revolution." It's available from the KPMG website here, and from the CAR website here. I missed the report when it first came out, but then saw this story about it in a recent issue of the Economist magazine.

Many people have heard about the self-driving cars run by Google that have already driven over 200,000 miles on public roads. The report makes clear that automakers are taking this technology very seriously as well, and are developing the range of sensor-based and connected-vehicle technologies that would be needed to make this work. Examples of the technology include the Light Detection and Ranging (LIDAR) equipment that does 360-degree sensing around a car. The LIDAR systems that Google retrofitted into cars cost about $70,000 per car. Dedicated Short-Range Communication (DSRC) has its own standards and a designated frequency for short-range communication, and thus focuses on vehicle-to-vehicle and vehicle-to-infrastructure communication. Ultimately, these would be tied together so that self-driving cars could travel closely together in "platoons" and minimize traffic congestion. And it's not just Google and the car companies. Intel Capital, for example, recently launched a $100 million Connected Car Fund.

Of course, it is always possible that driverless cars will run up against insurmountable barriers. But skeptics should remember that the original idea of the automobile looked pretty dicey as well. Millions of adults will be personally driving motorized vehicles at potentially high speeds? They will drive on a network of publicly provided roads that will reach all over the country? They will fill these vehicles with flammable fuel that will be dispensed by tens of thousands of small stores all over the country? If the social gains seem large enough, technologies often have a way of emerging. With driverless cars, what are some of the gains? Quotations are from the report: as usual, footnotes are omitted for readability.

Costs of Traffic Accidents

Self-driving cars have the potential to save tens of thousands of lives, and prevent hundreds of thousands of injuries, every year. "In 2010, there were approximately six million vehicle crashes leading to 32,788 traffic deaths, or approximately 15 deaths per 100,000 people. Vehicle crashes are the leading cause of death for Americans aged 4–34. And of the 6 million crashes, 93 percent are attributable to human error. ... More than 2.3 million adult drivers and passengers were treated in U.S. emergency rooms in 2009. According to research from the American Automobile Association (AAA), traffic crashes cost Americans $299.5 billion annually." Moreover, an enormous reduction in crash risk would allow cars to be redesigned to be much lighter.

Costs of Infrastructure

Driverless cars will allow many more cars to use a highway simultaneously. "An essential implication for an autonomous vehicle infrastructure is that, because efficiency will improve so dramatically, traffic capacity will increase exponentially without building additional lanes or roadways. Research indicates that platooning of vehicles could increase highway lane capacity by up to 500 percent. It may even be possible to convert existing vehicle infrastructure to bicycle or pedestrian uses. Autonomous transportation infrastructure could bring an end to the congested streets and extra-wide highways of large urban areas."

They could reduce the cost of design of highways. "[T]oday’s roadways and supporting infrastructure must accommodate for the imprecise and often-unpredictable movement patterns of human-driven vehicles with extra-wide lanes, guardrails, stop signs, wide shoulders, rumble strips and other features not required for self-driving, crashless vehicles. Without those accommodations, the United States could significantly reduce the more than $75 billion it spends annually on roads, highways, bridges, and other infrastructure."

Driverless cars will alter the need for parking. Imagine that your car will drop you at your office door, head off to park itself, and come back when you call it. "In his book ReThinking a Lot (2012), Eran Ben-Joseph notes, “In some U.S. cities, parking lots cover more than a third of the land area, becoming the single most salient landscape feature of our built environment.”"

Costs of Time

Driverless cars might be faster, but in addition, they open up the possibility of using travel time for work or relaxation. Your car could become a rolling office, or a place for watching movies, or a place for a nap. "An automated transportation system could not only eliminate most urban congestion, but it would also allow travelers to make productive use of travel time. In 2010, an estimated 86.3 percent of all workers 16 years of age and older commuted to work in a car, truck, or van, and 88.8 percent of those drove alone ... The average commute time in the United States is about 25 minutes. Thus, on average, approximately 80 percent of the U.S. workforce loses 50 minutes of potential productivity every workday. With convergence, all or part of this time is recoverable. Self-driving vehicles may be customized to serve the needs of the traveler, for example as mobile offices, sleep pods, or entertainment centers." I find myself imagining the overnight road-trip, where instead of driving all day, you sleep in the car and awake at your destination.
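The report's figures of "approximately 80 percent" and "50 minutes" look like simple arithmetic on the numbers just quoted. Here is a minimal Python sketch of that arithmetic, under two assumptions of mine that the report does not state: that the 80 percent is the share of workers who both commute by car, truck, or van and drive alone, and that the 50 minutes is a round trip of two 25-minute commutes.

```python
# Inputs quoted from the report (2010 data).
car_truck_van_share = 0.863  # share of workers 16+ commuting by car, truck, or van
drove_alone_share = 0.888    # share of those car commuters who drove alone
one_way_commute_min = 25     # average one-way commute time, in minutes

# Assumption: "approximately 80 percent" = workers who commute by car AND drive alone.
solo_driver_share = car_truck_van_share * drove_alone_share

# Assumption: "50 minutes" = round trip, to work and back.
daily_minutes_at_wheel = 2 * one_way_commute_min

print(f"Share of workers driving alone: {solo_driver_share:.1%}")
print(f"Minutes behind the wheel per workday: {daily_minutes_at_wheel}")
```

The product comes to about 77 percent, which is consistent with the report's "approximately 80 percent" if the figure was rounded to the nearest ten.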

Costs of Energy

The combination of much lighter cars, being driven much more efficiently, could dramatically reduce energy use. Lighter cars use less fuel: "Vehicles could also be significantly lighter and more energy efficient than their human-operated counterparts as they no longer need all the heavy safety features, such as reinforced steel bodies, crumple zones, and airbags. (A 20 percent reduction in weight corresponds to a 20 percent increase in efficiency.)" "Platooning alone, which would reduce the effective drag coefficient on following vehicles, could reduce highway fuel use by up to 20 percent..." "According to a report published by the MIT Media Lab, “In congested urban areas, about 40 percent of total gasoline use is in cars looking for parking.”"

Costs of Car Ownership

Most cars are unused for 22 hours out of every day. I already know people in cities like New York who own a car, but keep it in storage for out-of-city trips. I know people who use companies like Zipcar, a membership-based service that lets you have a car for a few hours when you need it. Driverless cars may offer a replacement for car ownership. Need a car? A few taps on your smart-phone and one will come to meet you, and take you where you want to be. The price will of course be lower if you don't mind being picked up in an automated carpool.

Mobility for the Young and the Old

Imagine being an elderly person who has become uncomfortable with driving, at least at certain times or under certain conditions. Driverless cars would offer continued mobility. Imagine being able to put your teenager in a car and have them safely delivered to their destination. Imagine always having a safe ride home after a night on the town.

How Fast?

The fully self-driving car isn't right around the corner. Clearly, costs need to come down substantially and a number of complementary technologies need to be created. However, we do already have cars in the commercial market with cruise control and anti-lock brakes, as well as cars that sense potential crash hazards and can parallel park themselves. Changes like these happen slowly, and then in a rush. As the report notes, "The adoption of most new technologies proceeds along an S-curve, and we believe the path to self-driving vehicles will follow a similar trajectory." Maybe 10-15 years? Faster?


Wednesday, October 31, 2012

Tuesday, October 30, 2012

How Retirement Age Tracks Social Security's Rules

Back in 1983, one of the steps taken to bolster the long-run finances of the Social Security system was to phase in a rise in the "normal" or "full" retirement age. The normal retirement age for receiving full Social Security benefits had been 65, with "early retirement" with lower benefits possible at age 62. Under the new rules, the normal retirement age remained 65 for those born in 1937 or earlier--and thus turning 65 in 2002 or earlier. It then phased up by 2 months per year, so that for those born six years later, in 1943 or after, the normal retirement age is now 66. Written into law is a follow-up increase in which the normal retirement age will rise from 66 to 67, again phased in at a rate of two months per year, for those born from 1955 to 1960.
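For readers who want to check the phase-in arithmetic, here is a minimal Python sketch of the schedule exactly as described in the paragraph above: 65 through the 1937 birth cohort, up 2 months per year to 66 for 1943, flat at 66 through 1954, then up 2 months per year to 67 for 1960. The function names are hypothetical, and the sketch ignores any finer administrative details of the actual Social Security rules.

```python
def normal_retirement_age_months(birth_year: int) -> int:
    """Normal (full) retirement age in months, by birth year, following the
    1983 schedule as summarized above. Hypothetical helper, not an SSA tool."""
    if birth_year <= 1937:
        return 65 * 12                             # unchanged at 65
    if birth_year <= 1943:
        return 65 * 12 + 2 * (birth_year - 1937)   # +2 months per year; 1943 -> 66
    if birth_year <= 1954:
        return 66 * 12                             # plateau at 66
    if birth_year <= 1960:
        return 66 * 12 + 2 * (birth_year - 1954)   # +2 months per year; 1960 -> 67
    return 67 * 12                                 # 67 for later cohorts


def as_years_and_months(months: int) -> str:
    years, extra = divmod(months, 12)
    return f"{years} years, {extra} months"


for year in (1937, 1940, 1943, 1950, 1957, 1960):
    print(year, as_years_and_months(normal_retirement_age_months(year)))
```

Writing the schedule in months keeps the two-months-per-year steps as exact integers instead of fractional years.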

How has this change altered actual retirement patterns? What are the reasons, either for retirees or for the finances of Social Security, to encourage still-later retirement?

Economists have long recognized that what a government designates as the "normal" retirement age has a big effect on when people actually choose to retire. Luc Behaghel and David M. Blau present some of the recent evidence in "Framing Social Security Reform: Behavioral Responses to Changes in the Full Retirement Age," which appears in the November 2012 American Economic Journal: Economic Policy (4(4): 41–67). (The journal isn't freely available on-line, but many in academia will have access through a library subscription.)

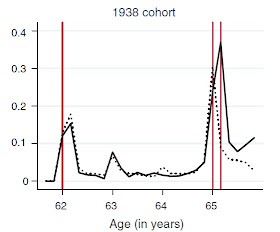

Consider the following graphs from Behaghel and Blau. Each one is for those born in a different year, from 1937 up through 1942, as the normal retirement age phased up. These people are the ones reaching the traditional normal retirement age of 65 in the early and mid-2000s. The solid line shows the probability of retirement at each age. Vertical red lines mark the early retirement age of 62, the previous normal retirement age of 65, and the normal retirement age that applies to that birth cohort as it phases up two months per year. The dashed line, which is the same in all the figures, shows for comparison the retirement pattern for those born over the 1931-1936 period.

The most striking pattern is that the probability of retiring at a certain age almost exactly tracks the changes in the normal retirement age: that is, the solid line spikes at the red vertical line showing the normal retirement age. There is also a spike at the early retirement age of 62. Here are the patterns.

The evidence here seems clear: People are making their retirement choices in synch with the government-set normal retirement age. This pattern isn't new: as the authors point out, a spike in retirements at age 65 became visible in the data back in the early 1940s, about five years after Social Security became law. Still, the obvious question (for an economist) is why people would make this choice. If you retire later than the normal retirement age, your monthly benefits are scaled up, so from the viewpoint of overall expected lifetime payments, you don't gain from retiring earlier. A number of possible explanations have been proposed: 1) people don't have other sources of income and need to take the retirement benefits as soon as possible for current income; 2) people are myopic, or don't recognize that their monthly benefits would be higher if they delayed retirement; 3) many people are waiting until age 65 to retire so that they can move from their employer health insurance to Medicare; 4) some company retirement plans encourage retiring at age 65.

However, none of these explanations offers an obvious reason why the retirement age would exactly track the changes in the Social Security normal retirement age. So a final "behavioral" explanation seems to be that the "normal" retirement age announced by the government, whatever it is, is treated by many people as a recommendation that should be followed. Choosing a retirement date in this way is probably suboptimal both for individuals and for the finances of the Social Security system.

From the standpoint of individuals, there's a widespread sense among economists that many retirees would benefit from having more of their wealth in annuities--that is, an amount that would pay out no matter how long they live. In the Fall 2011 issue of my own Journal of Economic Perspectives, Shlomo Benartzi, Alessandro Previtero, and Richard H. Thaler have an article on "Annuitization Puzzles," which makes the point that when you delay receiving Social Security, you are in effect buying an annuity: that is, you are taking less in the present--which is similar to "paying" for the annuity--in exchange for a larger long-term payment in the future. They write: "[T]he easiest way to increase the amount of annuity income that families have is to delay the age at which people start claiming Social Security benefits. Participants are first eligible to start claiming benefits at age 62, but by waiting to begin, the monthly payments increase in an actuarially fair manner until age 70."

They further argue that a good starting point for encouraging such behavior would be to re-frame the way in which the Social Security Administration, and all the rest of us, talk about Social Security benefits. Imagine that, with no change at all in the current law, we all started talking about a "standard retirement age" of 70. We would point out that you can retire earlier, but if you do, monthly benefits will be lower. If the choice of when to retire were framed in this way, my strong suspicion is that many people would react differently than they do when we announce that the "normal retirement age" is 66, and that if you wait, your monthly benefits will be higher. Again, people seem to react to what the government designates as the target for retirement age.

This labeling change might encourage people to work longer, but it would not affect the solvency of the Social Security system, because those who wait longer to retire are, in effect, paying for their own higher monthly benefits by delaying the receipt of those benefits. However, the Social Security actuaries offer a number of illustrative calculations on their website about possible steps to bolster the financing of the system. One proposal for pushing back the normal retirement age looks like this: "After the normal retirement age (NRA) reaches 67 for those age 62 in 2022, increase the NRA 2 months per year until it reaches 69 for individuals attaining age 62 in 2034. Thereafter, increase the NRA 1 month every 2 years."

Thus, this proposal would represent no change in the rules for Social Security benefits for anyone born in 1960 or earlier--the youngest of whom are in their early 50s at present. Under this proposal, those born after 1960 would face the gradual phase-in--but of course, they would also benefit from having a program that is much closer to fully funded. The actuaries estimate that this step by itself would address about 44% of the gap over the next 75 years between what Social Security has promised and the funding that is expected during that time. Given the predicted shortfalls of the Social Security system in the future, and the gains in life expectancy both in the last few decades and expected in the next few decades, and the parlous condition of large budget deficits reaching into the future, I would be open to proposals to phase in a more rapid and more sustained rise in the normal retirement age for Social Security benefits.
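The actuaries' illustrative proposal can be written out the same way, keyed to the year a person turns 62. This is a minimal Python sketch with a hypothetical function name. The quoted text doesn't say exactly when the first one-month step after 2034 occurs; reading "1 month every 2 years" as a first extra month for those turning 62 in 2036 is my assumption.

```python
def proposed_nra_months(year_turning_62: int) -> int:
    """Normal retirement age in months under the illustrative proposal quoted
    above, for people turning 62 in 2022 or later. Hypothetical helper."""
    if year_turning_62 < 2022:
        raise ValueError("proposal schedule starts with people turning 62 in 2022")
    if year_turning_62 <= 2034:
        # 67 for those turning 62 in 2022, then +2 months per year; 2034 -> 69.
        return 67 * 12 + 2 * (year_turning_62 - 2022)
    # Assumption: +1 month every 2 years, first applied to those turning 62 in 2036.
    return 69 * 12 + (year_turning_62 - 2034) // 2


for year in (2022, 2028, 2034, 2036, 2040):
    years, months = divmod(proposed_nra_months(year), 12)
    print(f"Turning 62 in {year}: NRA {years} years, {months} months")
```

The check that 12 years of 2-month steps (2022 to 2034) adds exactly 24 months, taking the NRA from 67 to 69, confirms the two endpoints in the quoted proposal are consistent with each other.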


Monday, October 29, 2012

Time Watching Television

I recently ran across this historical data from the Nielsen company for the time American households spend watching television, per day.

I rarely watch 8 hours of television per week, much less per day. I had a conversation the other day in which someone was incredulous that I have never seen an episode of Seinfeld, or Friends, or actually any sitcom in the last decade or so. I told them that I used to watch M*A*S*H now and then, and they looked at me with pity.

Economists sometimes quote the old proverb: "De gustibus non est disputandum." There's no arguing over taste. We tend to accept consumer tastes and preferences as given, and proceed from there. I suppose that those of us who blog, and then hope for readers, can't really complain about those who spend time looking at a screen. I certainly have my own personal time-wasters, like reading an inordinate number of mysteries. I assume that for many people the television is on in the background of other activities. But at some deep level, I just don't understand averaging 8 hours of television per day. I always remember the long-ago jibe from the old radio comedian Fred Allen: "Television is a medium because anything well done is rare."

Note: Thanks to Danlu Hu for downloading the data and creating this figure.


Friday, October 26, 2012

Would Inflation Help Cut Government Debt?

When teaching about the effects of an unexpected surge of inflation, I always point out that those who borrowed at a fixed rate of interest benefit from the inflation, because they can repay their borrowing in inflated (and less valuable) dollars. And sometimes I toss in the mock-cheerful reminder that the U.S. government is the single biggest borrower--and thus presumably has a vested interest in a higher rate of inflation. But presuming an easy connection from higher inflation to reduced government debt burdens is actually a more problematic policy than it may at first appear.

Menzie Chinn and Jeffry Frieden make a lucid case for how higher inflation could ease the way to a lower real debt burden in an essay in the Milken Institute Review (available on-line with free registration). They point out that after World War II the U.S. government had accumulated a total debt of more than 100% of GDP, but that it cut that debt/GDP burden in half in about 10 years with a combination of economic growth and about 4% inflation. They are at pains to point out that they aren't suggesting a lot of inflation. But as they see it, given the fact that debt/GDP ratios are extremely high by historical standards in the U.S. and in a number of other high-income countries, a quiet process of slowly inflating away some of the real value of the debt is far preferable to the messy process of governments threatening to default. They write:

"Creditors, of course, receive less in real terms than they had contracted for – and probably less than they expected when they agreed to the contract. That may seem unfair. But the outcome is little different than what happens to creditors when they are forced to accept the restructuring of their claims through one form of bankruptcy or another. ...It’s important to remember, though, that we are not suggesting a lot of inflation – certainly nothing like the double-digit rates that followed the second oil shock in 1979 to 1981. Rather, we believe the goal should be to target moderate inflation, only enough to reduce the debt burden to more manageable levels, and adjust monetary policy accordingly. This probably means something in the 4 to 6 percent range for several years. ... We’re not claiming that inflation is a painless way to speed deleveraging. We are claiming, though, that it is less painful than the realistic alternatives. ... Unusual times call for unusual measures."
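The arithmetic behind inflating away debt is simple to sketch: if the nominal stock of debt stays fixed, the debt/GDP ratio shrinks each year by the growth factor of nominal GDP, (1 + real growth) × (1 + inflation). The minimal Python sketch below assumes a starting ratio of 100 percent and 3 percent real growth. The 3 percent figure is a hypothetical input chosen for illustration, not a number from Chinn and Frieden, and the sketch ignores the budget surpluses and interest costs of the actual postwar period.

```python
def debt_to_gdp_after(initial_ratio: float, real_growth: float,
                      inflation: float, years: int) -> float:
    """Debt/GDP after `years`, assuming nominal debt stays constant (interest is
    assumed to be paid from current revenue) while nominal GDP grows each year
    by (1 + real_growth) * (1 + inflation). A deliberately simplified sketch."""
    nominal_gdp_growth = (1 + real_growth) * (1 + inflation)
    return initial_ratio / nominal_gdp_growth ** years


# Hypothetical inputs: debt/GDP of 100 percent and 3 percent real growth.
with_inflation = debt_to_gdp_after(1.00, real_growth=0.03, inflation=0.04, years=10)
without_inflation = debt_to_gdp_after(1.00, real_growth=0.03, inflation=0.00, years=10)
print(f"4% inflation: debt/GDP falls to {with_inflation:.0%}")     # roughly half
print(f"0% inflation: debt/GDP falls to {without_inflation:.0%}")
```

Under these assumptions, a decade of 4 percent inflation roughly halves the ratio, while the same real growth with zero inflation trims it by only about a quarter.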

The counterargument, which holds that inflation may not do much to reduce debt/GDP ratios, starts from this insight: Yes, inflation reduces the real value of past debt, and that works well in a situation like the aftermath of World War II, when large debts were incurred but the borrowing then stopped. For example, the U.S. government ran budget surpluses in four of the five years from 1947 to 1951. But if fiscal policy is on an unsustainable path of overly large deficits, then inflation isn't going to fix the problem. In an essay appearing in the Annual Report of the Federal Reserve Bank of Richmond, "Unsustainable Fiscal Policy: Implications for Monetary Policy," Renee Haltom and John A. Weinberg argue that inflation would not actually offer much hope of reducing the current U.S. government debt burden.

"It is useful to consider how much inflation would be required to adequately reduce current debt levels. ... To consider how much inflation would be required today to address current debt imbalances, Michael Krause and Stéphane Moyen (2011) estimate that a moderate rise in inflation to 4 percent annually sustained for at least 10 years—in effect a permanent doubling of the Fed’s inflation objective—would reduce the value of the additional debt that accrued during the 2008–09 financial crisis, not the total debt, by just 25 percent. If the rise in inflation lasted only two or three years, a 16 percentage point increase—from roughly 2 percent inflation today to 18 percent—would be required to reduce that additional debt by just 3 percent to 8 percent. Such inflation rates were not reached even in the worst days of the inflationary 1970s. The reason inflation has such a minimal impact on debt in Krause and Moyen’s estimates is that while inflation erodes the value of existing nominal debt, it increases the financing costs for newly issued debt because investors must be compensated to be willing to hold bonds that will be subject to higher inflation. This effect would be greater for governments such as the United States that have a short average maturity of government debt and therefore need to reissue it often."

In a similar spirit, the IMF wrote in Chapter 3 of its most recent World Economic Outlook that inflation at low levels often seems to have little effect in reducing government debt: "The relationship between inflation and [government] debt reduction is more ambiguous. Although hyperinflation is clearly associated with sharp debt reduction, when hyperinflation episodes are excluded, there is no clear association between the average inflation rate and the change in debt."

"With these estimates in mind, it is worth recalling the CBO’s projection that debt held by the public may triple as a percent of GDP within 25 years. The estimates cited above suggest that inflation is simply not a viable strategy for reducing such debt levels. In addition, it is important to remember that inflation is costly on many levels. Inflation high enough to significantly erode the debt would inflict considerable damage on the economy and would require costly policies for the Fed to regain its credibility after the fact. Inflation that was engineered specifically to erode debt would provide a significant source of fiscal revenue without approval via the democratic process, and so would raise questions about the role of the central bank as opposed to the roles of Congress and the executive branch in raising fiscal revenues. Ultimately, the solution to high debt levels must come from fiscal authorities."

In short, if federal deficits are first definitively placed on a diminishing path, then a quiet surge of unexpected inflation could help reduce past debts. But on the current U.S. trajectory of a steadily rising debt/GDP ratio over the next few decades, inflation isn't the answer--and could end up being just another part of the problem.
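The Krause and Moyen point about short debt maturities can be illustrated with a toy debt-dynamics calculation. What follows is a hypothetical sketch, not their model: it assumes a constant real interest rate and real growth rate, prices refinanced debt with the exact Fisher relation, and lets a fixed share of the debt roll over each year while the rest keeps its old rate. The function name `debt_ratio_path` and every number in it are illustrative assumptions.

```python
def debt_ratio_path(b0, years, real_rate, growth, old_inflation,
                    new_inflation, rollover_share, primary_deficit=0.0):
    """Return the debt/GDP ratio at the end of each year (toy model).

    Illustrative assumptions only:
    - Debt outstanding at the start pays a nominal rate priced for old_inflation.
    - Each year, rollover_share of the debt is refinanced at a nominal rate
      priced for new_inflation, and the average rate is a simple blend.
    - Nominal GDP grows by (1 + growth) * (1 + new_inflation) per year.
    """
    def nominal_rate(inflation):
        # Exact Fisher relation: (1 + i) = (1 + r) * (1 + pi)
        return (1 + real_rate) * (1 + inflation) - 1

    avg_rate = nominal_rate(old_inflation)
    ratio = b0
    path = []
    for _ in range(years):
        # The refinanced slice now carries the inflation-compensated rate.
        avg_rate = ((1 - rollover_share) * avg_rate
                    + rollover_share * nominal_rate(new_inflation))
        # Interest raises the debt; nominal GDP growth shrinks the ratio.
        ratio = ratio * (1 + avg_rate) / ((1 + growth) * (1 + new_inflation))
        ratio += primary_deficit
        path.append(ratio)
    return path


# Hypothetical inputs: debt at 70% of GDP, 1% real rate, 2% real growth,
# and inflation either staying at 2% or rising to 4% for ten years.
baseline = debt_ratio_path(0.70, 10, 0.01, 0.02, 0.02, 0.02, rollover_share=0.1)
long_maturity = debt_ratio_path(0.70, 10, 0.01, 0.02, 0.02, 0.04, rollover_share=0.1)
short_maturity = debt_ratio_path(0.70, 10, 0.01, 0.02, 0.02, 0.04, rollover_share=0.5)
for label, path in [("2% inflation", baseline),
                    ("4%, long maturity", long_maturity),
                    ("4%, short maturity", short_maturity)]:
    print(f"{label:>20}: {path[-1]:.3f}")
```

In this toy setup, higher inflation lowers the debt ratio relative to the 2% baseline, but by less when more of the debt is refinanced quickly; if all of it rolls over every year (`rollover_share=1.0`), the inflation effect disappears entirely. The primary deficit is left at zero here, so the sketch leaves out the ongoing borrowing that the post argues is the larger problem.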

Thursday, October 25, 2012

Can the Cure for Cancer Be Securitized?

The October 2012 issue of Nature Biotechnology offers several articles on the theme of "Commercializing biomedical innovations." The opening "Editorial" sets the stage this way: "Investment in biomedical innovation is not what it once was. Millions of dollars have fled the life sciences risk capital pool. The number of early venture deals in biotech is smaller than ever. Public markets are all but closed, biotech-pharma deals increasingly back-loaded with contingent, rather than upfront, payments. Paths to market are more winding and stonier. Government cuts are closing laboratories and culling blue-sky research. Never has there been a more pressing need to look beyond the existing pools of funding and talent to galvanize biomedical innovation."

Thus, the papers look at a variety of topics: interactions between universities and the biomed industry; different business models for biomed firms; how venture capital firms often seem to enter biomed start-ups "too early," well before a commercial payoff can be expected; funding research through nonprofit foundations that promote free dissemination of any findings; and others. But my eye was particularly caught by a proposal from three economists, Jose-Maria Fernandez, Roger M. Stein and Andrew W. Lo, for "Commercializing biomedical research through securitization techniques."

These authors point out a paradoxical situation in biomedical research. On one side, the research journals and even the news media are full of breakthrough developments, "including gene therapies for previously incurable rare diseases, molecularly targeted oncology drugs, new modes of medical imaging and radiosurgery, biomarkers for drug response or for such diseases as prostate cancer and heart disease, and the use of human genome sequencing to find treatments for diseases that have confounded conventional medicine, not to mention advances in bioinformatics and computing power that have enabled many of these applications."

On the other side, the existing business structures for translating these developments into new products don't seem to be working well. "Consensus is growing that the bench-to-bedside process of translating biomedical research into effective therapeutics is broken. ... The productivity of big pharmaceutical companies—as measured by the number of new molecular entity and biologic license applications per dollar of R&D investment—has declined in recent years ... Life sciences venture-capital investments have not fared much better, with an average internal rate of return of −1% over the 10-year period from 2001 through 2010 ..."

Fernandez, Stein, and Lo suggest that the fundamental problem is that the technological breakthroughs present a vast array of possibilities, but these possibilities are complex and costly to pursue. A large portfolio of new biomed innovations is probably, overall, a money-maker. But when firms need to think about pursuing just a few of the many possibilities, at great cost, they may often decide not to do so. They write:

"The traditional quarterly earnings cycle, real-time pricing and dispersed ownership of public equities imply constant scrutiny of corporate performance from many different types of shareholders, all pushing senior management toward projects and strategies with clearer and more immediate payoffs, and away from more speculative but potentially transformative science and translational research. ... Industry professionals cite the existence of a ‘valley of death’—a funding gap between basic biomedical research and clinical development. For example, in 2010, only $6–7 billion was spent on translational efforts, whereas $48 billion was spent on basic research and $127 billion was spent on clinical development that same year."

What's their alternative? "We propose an alternative for funding biomedical innovation that addresses these issues through the use of ‘financial engineering’... Our approach involves two components: (i) creating large diversified portfolios—‘megafunds’ on the order of $5–30 billion—of biomedical projects at all stages of development; and (ii) structuring the financing for these portfolios as combinations of equity and securitized debt so as to access much larger sources of investment capital. These two components are inextricably intertwined: diversification within a single entity reduces risk to such an extent that the entity can raise assets by issuing both debt and equity, and the much larger capacity of debt markets makes this diversification possible for multi-billion-dollar portfolios of many expensive and highly risky projects. ... In a simulation using historical data for new molecular entities in oncology from 1990 to 2011, we find that megafunds of $5–15 billion may yield average investment returns of 8.9–11.4% for equity holders and 5–8% for ‘research-backed obligation’ holders, which are lower than typical venture-capital hurdle rates but attractive to pension funds, insurance companies and other large institutional investors."
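The diversification argument can be illustrated with a deliberately simplified calculation. The sketch below is not the authors' simulation, which uses historical oncology data and a richer capital structure. It assumes independent projects with identical costs, success probabilities, and payoffs, ignores interest on the debt, and asks how likely the fund's total payoff is to fall short of the face value of its debt. The function name `shortfall_probability` and every number are hypothetical.

```python
from math import comb


def shortfall_probability(n_projects, p_success, payoff_per_success, debt_face_value):
    """Probability that the portfolio's total payoff falls below the debt's face value.

    Toy assumptions: projects succeed independently with the same probability,
    each success pays the same amount, and failures pay nothing, so the number
    of successes follows a binomial distribution.
    """
    total = 0.0
    for k in range(n_projects + 1):
        if k * payoff_per_success < debt_face_value:
            # Binomial probability of exactly k successes out of n_projects.
            total += comb(n_projects, k) * p_success**k * (1 - p_success)**(n_projects - k)
    return total


# Hypothetical: each project costs 1 unit, succeeds 10% of the time, and a
# success pays 12 units (expected payoff 1.2 per unit invested). Debt with a
# face value equal to half the invested capital funds part of the portfolio.
for n in (10, 50, 150):
    risk = shortfall_probability(n, 0.10, 12.0, debt_face_value=0.5 * n)
    print(f"{n:>4} projects: chance payoffs fall short of debt = {risk:.4f}")
```

Under these assumptions, a small fund has a large chance of being unable to repay its debt, and that chance falls sharply as the number of independent projects grows, which is the intuition behind pairing very large portfolios with debt financing. Real drug projects are likely to be correlated, which would weaken the effect.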

Frankly, I have no clear idea whether the Fernandez, Stein, and Lo approach to raising money for biomed companies is viable. One never knows in advance whether an innovation will function well and fulfill real-world needs, whether that innovation is financial or real. But if the markets can put together this sort of deal, it might offer an enormous boost to the process of translating biomedical innovation into actual health care products. In the aftermath of the Great Recession, the words "financial innovation" are often spoken with a heavy dose of sarcasm, as if all we need for a 21st-century economy is good old passbook savings accounts. But the Fernandez, Stein, and Lo proposal is an example of how financial innovation might save lives by addressing an important real-world problem. It seems well worth someone trying it out--with the proviso that if it doesn't work, no one gets bailed out!


Wednesday, October 24, 2012

When Rent Control Ended in Cambridge, Mass.

Every intro class teaches about price ceilings, and I suspect that 99% of them use rent control laws as an example. Of course, the standard lesson from a supply-and-demand diagram is that price ceilings lead to a situation where the quantity demanded exceeds the quantity supplied, and so while the price of rent-controlled apartments is lower, good luck in finding a vacancy!
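The supply-and-demand lesson can be made concrete with a small calculation. This sketch assumes straight-line demand and supply curves for rental units with made-up coefficients; the class name `LinearMarket` and all numbers are hypothetical, not estimates for Cambridge or anywhere else.

```python
from dataclasses import dataclass


@dataclass
class LinearMarket:
    """Hypothetical linear rental market: Qd = a - b*P and Qs = c + d*P."""
    demand_intercept: float  # a: units demanded at a rent of zero
    demand_slope: float      # b: units of demand lost per $1 of rent
    supply_intercept: float  # c: units supplied at a rent of zero
    supply_slope: float      # d: extra units supplied per $1 of rent

    def quantity_demanded(self, rent):
        return max(0.0, self.demand_intercept - self.demand_slope * rent)

    def quantity_supplied(self, rent):
        return max(0.0, self.supply_intercept + self.supply_slope * rent)

    def equilibrium_rent(self):
        # Solve a - b*P = c + d*P for P.
        return (self.demand_intercept - self.supply_intercept) / (
            self.demand_slope + self.supply_slope)

    def with_ceiling(self, ceiling):
        """Return (rent charged, units actually rented, shortage)."""
        # A ceiling above the equilibrium rent does not bind.
        rent = min(ceiling, self.equilibrium_rent())
        demanded = self.quantity_demanded(rent)
        supplied = self.quantity_supplied(rent)
        # Only as many units get rented as landlords choose to supply.
        return rent, min(demanded, supplied), max(0.0, demanded - supplied)


market = LinearMarket(demand_intercept=1000, demand_slope=0.5,
                      supply_intercept=200, supply_slope=0.5)
print(market.equilibrium_rent())  # 800.0
print(market.with_ceiling(600))   # (600, 500.0, 200.0)
print(market.with_ceiling(900))   # (800.0, 600.0, 0.0)
```

With the rent capped at 600 in this example, 700 units are demanded but only 500 are supplied, leaving a shortage of 200. The sketch captures only the quantity margin; it says nothing about the "many margins for action," such as skimped maintenance, that landlords can also use.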

The slightly more sophisticated insight is what I call in my own intro textbook the problem of "many margins for action." (Of course, if you are teaching an intro econ class, I encourage you to take a look at my Principles of Economics textbook, a high quality and lower-cost alternative to the big publishers, available here.) Landlords who face rent control legislation can skimp on maintenance, or hunt for ways to force the renter to bear additional fees or costs. If a large number of landlords act in this way, the feeling of the neighborhood and property values for homes that are not rentals may be affected, too.

Cambridge, Massachusetts, had a rent control law in place from 1970 to 1994. It was ended by a statewide vote that barely squeaked out a 51%-49% majority--and ended despite the fact that Cambridge residents favored continuing the law by a 60%-40% majority. The law placed limits on rents for all rental properties in Cambridge built in 1969 or earlier. In "Housing Market Spillovers: Evidence from the End of Rent Control in Cambridge, Massachusetts," David H. Autor, Christopher J. Palmer, and Parag A. Pathak look at what happened. (The paper is published as NBER Working Paper 18125. These working papers are not freely available on-line, but many in academia will have access through institutional memberships. Full disclosure: David Autor is editor of my own Journal of Economic Perspectives, and thus my boss.) Autor, Palmer, and Pathak have data on rents and prices in controlled rental buildings, uncontrolled rental buildings, and owner-occupied housing. They can also make comparisons to neighboring suburbs that did not have rent control in place. Here are a few of their more striking findings:

-- The rent-controlled buildings in Cambridge, Mass., typically had rents 25%-40% below the level of uncontrolled rental buildings nearby. However, the maintenance of rent-controlled buildings was often subpar, with a higher incidence of issues like holes in walls or floors, chipped or peeling paint, loose railings, and the like. More broadly, owners of rent-controlled properties had no incentive to do any major fix-ups or renovations, because they would be unable to recoup the costs.

-- Rent control laws are still easy to find, if not exactly widespread, in the United States. For example, "New York City’s system of rent regulation affects at least one million apartments, while cities such as San Francisco, Los Angeles, Washington DC, and many towns in California and New Jersey have various forms of rent regulation."

-- Not surprisingly, the end of rent control in 1995 meant that prices of the buildings that had formerly been rent-controlled rose. "Our statistical analysis also indicates that rent controlled properties were valued at a discount of about 50 percent relative to never-controlled properties with comparable characteristics in the same neighborhoods during the rent control era, and that the assessed values of these properties increased by approximately 18 to 25 percent after rent control ended."

-- More surprising, it turns out that the end of rent control raised the value of all the non-controlled properties in Cambridge, too. Properties that were in a neighborhood with a higher percentage of rent-controlled properties increased in value by more than those in neighborhoods with a lower percentage of rent-controlled properties. Indeed, when rent control ended, the gains to owners of uncontrolled properties were greater in total than the gains to the owners of rent-controlled properties. "The economic magnitude of the effect of rent control removal on the value of Cambridge’s housing stock is $1.8 billion. We calculate that positive spillovers from decontrol added $1.0 billion to the value of the never-controlled housing stock in Cambridge, equal to 10 percent of its total value and one-sixth of its appreciation between 1994 and 2004. Notably, direct effects on decontrolled properties are smaller than the spillovers. We estimate that rent control removal raised the value of decontrolled properties by $770 million, which is 25 percent less than the spillover effect."

Taking all of this together, it seems to me that the way to think about rent control--at least in the form in which it was enacted in Cambridge, Mass.--is that it creates a situation of low-quality and poorly-maintained housing stock, which then rents for less than uncontrolled properties. If the goal of public policy is to create lower-quality and more affordable housing, there are other ways to accomplish that goal. For example, zoning laws could require that rental complexes include a mixture of regular and small-sized rental apartments, so that the small-sized (and thus "lower quality") apartments would rent for less. Or those with lower incomes could just receive housing vouchers.

But when rent control is enacted in a way that leads to degradation of a substantial portion of the housing stock, the costs are not carried only by landlords of those rent-controlled apartments. In fact, a majority of the costs may result from spillover effects on real estate that isn't rent-controlled. When a substantial proportion of the houses in a neighborhood are not well-maintained, everyone's housing prices will suffer.


Tuesday, October 23, 2012

What's Good for General Motors ...

As President Obama and Mitt Romney jostled back and forth about the bailout of General Motors and Chrysler during the debate last night, I was naturally reminded of the 1953 confirmation hearings for Charles E. Wilson for Secretary of Defense. Wilson had been president of GM since 1941, overseeing both the company's transformation to wartime production and then its return to peacetime. Much of the confirmation hearing revolved around how he would sell off or insulate his financial holdings from his government job--and more broadly, the difficulties of separating his role at GM from the role of Secretary of Defense.

On January 15, 1953, Wilson had this famous exchange with Senator Robert Hendrickson, a Republican from New Jersey:


"Senator Hendrickson. Mr. Wilson, you have told the committee, I think more than once this morning, that you see no area of conflict between your interest in the General Motors Corp. or the other companies, as a stockholder, and the position you are about to assume.Mr. Wilson. Yes, sir.

Senator Hendrickson. Well now, I am interested to know whether if a situation did arise where you had to make a decision which was extremely adverse to the interests of your stock and General Motors Corp. or any of these other companies, or extremely adverse to the company, in the interests of the United States Government, could you make that decision?Mr. Wilson. Yes, sir; I could. I cannot conceive of one because for years I thought what was good for our country was good for General Motors, and vice versa. The difference did not exist. Our company is too big. It goes with the welfare of the country. Our contribution to the Nation is quite considerable. I happen to know that toward the end of the war—I was coming back from Washington to New York on the train, and I happened to see the total of our country's lend-lease to Russia, and I was familiar with what we had done in the production of military goods in the war and I thought to myself, "My goodness, if the Russians had a General Motors in addition to what they have, they would not have needed any lend-lease," so I have no trouble—I will have no trouble over it, and if I did start to get into trouble I would put it up to the President to make the decision on that one. I cannot conceive of what it would be.

Senator Hendrickson. Well, frankly, I cannot either at the moment, but we never know what is in store for us.

Mr. Wilson. I cannot conceive of it. I do not think we are going to get into any foolishness like seizing the properties or anything like that, you know, like the Iranians are in over there, when they got into ---

Senator Hendrickson. I certainly hope not.

Mr. Wilson. You see, if that one came up for some reason or other then I would not like that. I do not think I would be on the job; I think I would quit because I would be so out of sympathy with trying to nationalize the industries of our country. I think it would be a terrible thing. That is about the only one I can think of. Of course, I do not think that is even a remote possibility. I think the whole trend of our country is the other way."

I've quoted here from the transcript of the actual hearings as printed in "Nominations: Hearings before the Committee on Armed Services, United States Senate, Eighty-third Congress, first session, on nominee designates Charles E. Wilson, to be Secretary of Defense; Roger M. Kyes, to be Deputy Secretary of Defense; Robert T. Stevens, to be Secretary of the Army; Robert B. Anderson, to be Secretary of the Navy; Harold E. Talbott, to be Secretary of the Air Force ..." January 15, 1953.

But the hearing had been closed to the public, and the transcript didn't come out for a few days. When reporters asked what had been said, they were told that Wilson had simply replied: "What's good for General Motors is good for the country." Democrats picked up the phrase on the campaign trail and used it against Republicans for being overly pro-business. The highly popular Li'l Abner comic strip had a character named General Bullmoose who often said: "What's good for General Bullmoose is good for the U.S.A.!"

The story goes that for a few years, when the quotation came up, Wilson would try to offer some context, but after a while he stopped bothering. When he stepped down as Secretary of Defense in 1957, he said: "I have never been too embarrassed over the thing, stated either way."

Of course, it's interesting that the Democratic party that bashed Charlie Wilson back in 1953 now finds itself in the position of arguing the modern version of "what's good for General Motors is good for the country." For those who want details about the actual bailout, my May 7 post on "The GM and Chrysler Bailouts" might be a useful starting point.

Here, I would just make the point that while GM remains an enormous company today, it was relatively much larger in the 1950s. In the Fortune 500 for 1955, General Motors was far and away the biggest U.S. company ranked by sales. GM had $9.8 billion in sales in 1955, with Exxon running second at $5.6 billion, U.S. Steel third at $3.2 billion, followed by General Electric at $3 billion. The GDP of the U.S. economy in 1955 was $415 billion, so for perspective, GM sales were about 2.4% of the U.S. economy.

In 2012, GM's sales are $150 billion, but in the Fortune 500 for 2012, it now runs a distant fifth in sales among U.S. firms. The two biggest firms by sales were Exxon Mobil at $450 billion in sales, just a few billion ahead of WalMart. The GDP of the U.S. economy is about $15 trillion in 2012, so GM sales are now more like 1% of GDP, rather than 2%. And many of those who argue that it was in the national interest to give GM more favorable government-arranged bankruptcy conditions, rather than the usual bankruptcy court, would likely be quite unwilling to give bailouts to Exxon Mobil or to WalMart--despite how much larger they are.
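To make the comparison concrete, here is a small Python sketch that divides GM's sales by nominal GDP for the two years, using only the figures quoted above. One caveat: sales are a gross measure while GDP counts value added, so the ratio is a yardstick of scale rather than GM's literal share of the economy. The helper name `share_of_gdp` is just an illustrative choice.

```python
# Figures as given in the post, in billions of current U.S. dollars.
GM_SALES = {1955: 9.8, 2012: 150.0}
US_GDP = {1955: 415.0, 2012: 15_000.0}


def share_of_gdp(sales: float, gdp: float) -> float:
    """Return sales as a percentage of GDP (a scale comparison, not a value-added share)."""
    return 100.0 * sales / gdp


for year in (1955, 2012):
    pct = share_of_gdp(GM_SALES[year], US_GDP[year])
    print(f"{year}: GM sales equal to {pct:.1f}% of GDP")
```

Rounded to one decimal place, this gives about 2.4% for 1955 and 1.0% for 2012.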

Monday, October 22, 2012

U.S. Birth Rates

Birth rates are one of those apparently dry statistics that have profound implications both for public policy and for how we live our day-to-day lives. Here are two figures from the Centers for Disease Control "National Vital Statistics Reports--Births: Preliminary Data for 2011."

Overall, U.S. birth rates are in decline and, as shown by the light blue line, have fallen below the levels seen in the Great Depression, which until recent years had been the lowest fertility level in U.S. history. The 2011 fertility rate of 63.2 births per 1,000 women aged 15-44 is the lowest in U.S. history. The actual number of births is nonetheless near a high, but only because the population as a whole has grown.
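The point about births being near a high even as the rate hits a record low is just multiplication: births equal the rate per 1,000 women aged 15-44 times the number of such women, divided by 1,000. The Python sketch below shows how a lower rate applied to a larger population can still yield more births. Only the 63.2 rate comes from the CDC report; the population sizes and the earlier rate of 70 per 1,000 are hypothetical values chosen purely for illustration.

```python
def general_fertility_rate(births: float, women_15_44: float) -> float:
    """Births per 1,000 women aged 15-44."""
    return 1000.0 * births / women_15_44


def births_from_rate(rate_per_1000: float, women_15_44: float) -> float:
    """Invert the rate: total births implied by a rate and a population of women 15-44."""
    return rate_per_1000 * women_15_44 / 1000.0


# Hypothetical populations, chosen only to illustrate the arithmetic.
earlier = births_from_rate(70.0, 50_000_000)  # higher rate, fewer women
later = births_from_rate(63.2, 62_000_000)    # lower rate (2011 figure), more women

print(f"earlier: {earlier:,.0f} births; later: {later:,.0f} births")
```

Under these made-up populations, the later period records more births despite its lower rate.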

This decline in birth rates is not evenly distributed across age groups. The figure below shows data only back to 1990, but it illustrates that for women aged 15-19 (red line), 20-24 (light blue line), and 25-29 (yellow line), birth rates have dropped noticeably. For mothers in the three age groups above 30, by contrast, birth rates have held steady or risen.

These shifts in birth rates have powerful implications for our lives. Politically, the share of households with a direct personal interest in supporting programs that benefit children--because they have children under age 18 living at home--has declined. Here's a figure from a Census Bureau report illustrating the long-term trend up through 2008. Back in the late 1950s and early 1960s, about 57% of U.S. family households included children under the age of 18. The share has been dropping since then and is now approaching 45%. When it comes to decisions about everything from school funding and public parks to pensions, health care, and other payments to retirees, it makes a real difference in a majority-rule political system whether the share of family households with children at home is above or below 50%.

The shift in birth rates represents a dramatic change in our personal lives as well. In an article in the Fall 2003 issue of my own Journal of Economic Perspectives, economist and demographer Ronald Lee offered one point in particular that has stuck with me: "In 1800, women spent about 70 percent of their adult years bearing and rearing young children, but that fraction has decreased in many parts of the world to only about 14 percent, due to lower fertility and longer life."

More people are going through young adulthood and setting their original template for adult living without becoming parents, and then having children later: in their late 20s, their 30s, or their 40s. Many people are raising children not in their 20s or 30s, but in their 40s and 50s. And with longer life expectancies, many people will find that while their care-giving responsibilities for children cover fewer years, their care-giving responsibilities for elderly parents cover more. Multigenerational family reunions used to follow a pattern of a few older folks of the grandparent generation, a larger number of their adult children and spouses, and then lots of children. In the future, there may be four or five generations in attendance, and with many people having fewer or no children, the "family tree" will look taller and skinnier. We are already living our family lives in a substantially different context than earlier generations did. The changes are large and getting larger, although sociologists and science fiction novelists probably have deeper insights than economists into how such changes are likely to affect our personal lives.

Friday, October 19, 2012

Can Agricultural Productivity Keep Growing?

As the world population continues to climb toward a projected population of 9 billion or so by mid-century, can agricultural productivity keep up? Keith Fuglie and Sun Ling Wang offer some thoughts in "New Evidence Points to Robust But Uneven Productivity Growth in Global Agriculture," which appears in the September issue of Amber Waves, published by the Economic Research Service at the U.S. Department of Agriculture.

Food prices have been rising for the last decade or so. Fuglie and Wang provide a figure that offers some perspective. The population data are from the U.N., showing the rise in world population from about 1.7 billion in 1900 to almost 7 billion in 2010. The food price data are a weighted average of 18 crop and livestock prices, where each price is weighted by that product's share of agricultural trade. Despite the sharp rise in demand for agricultural products from population growth and higher incomes, productivity growth in the farming sector has been large enough that the price of farm products fell by an average of 1% per year from 1900 to 2010 (as shown by the dashed line).
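A 1% annual decline sounds small, but it compounds. The Python sketch below, using only the figures in the paragraph above, computes the cumulative effect of a constant 1% annual fall over 1900-2010, along with the average annual population growth rate implied by going from 1.7 billion to 7 billion. Treating both as smooth constant-rate trends is a simplifying assumption; the actual series move around considerably from year to year.

```python
def cumulative_change(annual_rate: float, years: int) -> float:
    """Ratio of end level to start level under a constant compound annual rate."""
    return (1.0 + annual_rate) ** years


def annual_rate(start: float, end: float, years: int) -> float:
    """Constant compound annual rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


YEARS = 2010 - 1900

price_ratio = cumulative_change(-0.01, YEARS)  # 1% average annual price decline
pop_growth = annual_rate(1.7, 7.0, YEARS)      # world population, billions

print(f"price level in 2010 relative to 1900: {price_ratio:.2f}")
print(f"implied average population growth: {100 * pop_growth:.2f}% per year")
```

With these inputs, the price index ends at roughly a third of its 1900 level, while population grew at roughly 1.3% per year.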

What are some main factors likely to affect productivity growth in world agriculture in the years ahead? Here are some of the reactions I took away from the paper.

Many places around the world are far behind the frontier of agricultural productivity, and thus continue to have considerable room for catch-up growth. "Southeast Asia, China, and Latin America are now approaching the land and labor productivity levels achieved by today's industrialized nations in the 1960s."

The rate of output growth in agriculture hasn't changed much, but its sources have been shifting away from greater use of inputs (machinery, irrigation, fertilizer) and toward faster growth in total factor productivity (TFP), meaning output growth not accounted for by growth in inputs. "Global agricultural output growth has remained remarkably consistent over the past five decades--2.7 percent per year in the 1960s and between 2.1 and 2.5 percent average annual growth in each decade that followed. ... Between 1961 and 2009, about 60 percent of the tripling in global agricultural output was due to increases in input use, implying that improvements in TFP accounted for the other 40 percent. TFP's share of output growth, however, grew over time, and by the most recent decade (2001-09), TFP accounted for three-fourths of the growth in global agricultural production."
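The decade growth rates and the "tripling" in the quote are consistent with each other, and the 60/40 split can be read through standard growth accounting, in which output growth equals the contribution of input growth plus TFP growth. The Python sketch below checks both. It assumes the 60/40 shares apply to the log growth of output over 1961-2009 as a whole; that is a simplification for illustration, not necessarily how Fuglie and Wang compute their decomposition.

```python
import math


def implied_annual_rate(multiple: float, years: int) -> float:
    """Constant compound annual growth rate that multiplies a level by `multiple` over `years`."""
    return multiple ** (1.0 / years) - 1.0


# "Tripling" of global agricultural output between 1961 and 2009, per the quote.
g = implied_annual_rate(3.0, 2009 - 1961)

# Growth accounting in log terms: total output growth = input contribution + TFP growth.
# Assumption: the 60/40 split applies to the log growth of output over the whole period.
log_growth = math.log(3.0)
tfp_log = 0.40 * log_growth
input_log = log_growth - tfp_log

print(f"implied average output growth: {100 * g:.2f}% per year")
print(f"output multiple from TFP alone: {math.exp(tfp_log):.2f}")
print(f"output multiple from added inputs alone: {math.exp(input_log):.2f}")
```

A constant rate of about 2.3% per year triples output over 48 years, which sits inside the range of decade averages quoted. Under the stated assumption, TFP alone would have raised output by a factor of about 1.55 and added inputs by about 1.93, and the two multiply back to 3.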

There's no denying that feeding the global population as it rises toward nine billion will pose some real challenges, but it is clearly within the realm of possibility. For more details on how it might be achieved, here's my post from October 2011 on the subject of "How the World Can Feed 9 Billion People."

Sub-Saharan Africa has perhaps the greatest need for a productivity surge, because of low incomes and expected rates of future population growth, but is hindered by a lack of institutional capacity to sustain the mix of public- and private-sector agricultural R&D that benefits local farmers. "Sub-Saharan Africa faces perhaps the biggest challenge in achieving sustained, long-term productivity growth in agriculture. ... Raising agricultural productivity growth in Sub-Saharan Africa will likely require significantly higher public and private investments, especially in agricultural research and extension, as well as policy reforms to strengthen incentives for farmers.

Perhaps the single, most important factor separating countries that have successfully sustained